operator简介

原理

operator 是一种 kubernetes 的扩展形式,利用自定义资源对象(Custom Resource)来管理应用和组件,允许用户以 Kubernetes 的声明式 API 风格来管理应用及服务。

- CRD (Custom Resource Definition): 允许用户自定义 Kubernetes 资源,是一个类型;

- CR (Custom Resourse): CRD 的一个具体实例;

- webhook: 它本质上是一种 HTTP 回调,会注册到 apiserver 上。在 apiserver 特定事件发生时,会查询已注册的 webhook,并把相应的消息转发过去。按照处理类型的不同,一般可以将其分为两类:一类可能会修改传入对象,称为 mutating webhook;一类则会只读传入对象,称为 validating webhook。

- 工作队列: controller 的核心组件。它会监控集群内的资源变化,并把相关的对象,包括它的动作与 key,例如 Pod 的一个 Create 动作,作为一个事件存储于该队列中;

- controller :它会循环地处理上述工作队列,按照各自的逻辑把集群状态向预期状态推动。不同的 controller 处理的类型不同,比如 replicaset controller 关注的是副本数,会处理一些 Pod 相关的事件;

- operator:operator 是描述、部署和管理 kubernetes 应用的一套机制,从实现上来说,可以将其理解为 CRD 配合可选的 webhook 与 controller 来实现用户业务逻辑,即 operator = CRD + webhook + controller。

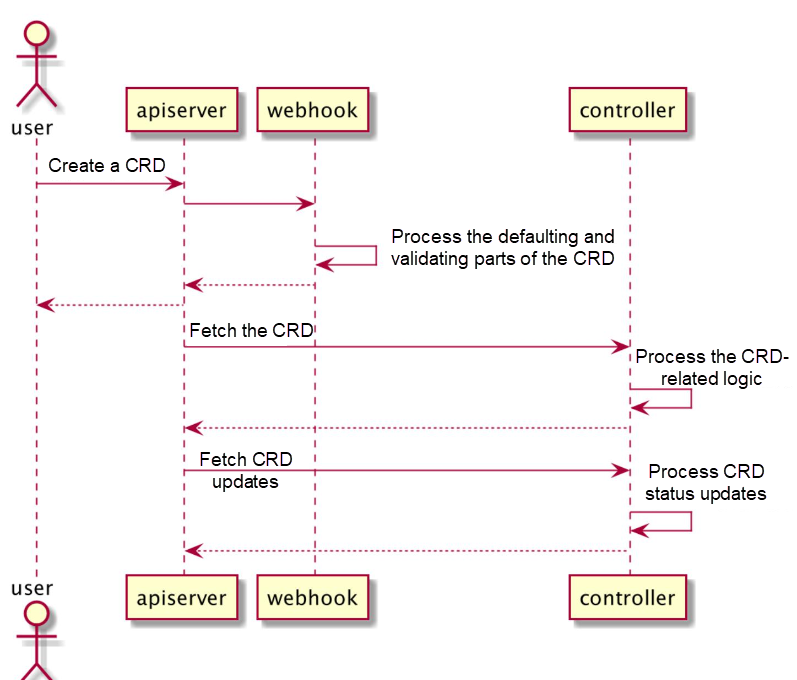

工作流程

- 用户创建一个自定义资源 (CRD);

- apiserver 根据自己注册的一个 pass 列表,把该 CRD 的请求转发给 webhook;

- webhook 一般会完成该 CRD 的缺省值设定和参数检验。webhook 处理完之后,相应的 CR 会被写入数据库,返回给用户;

- 与此同时,controller 会在后台监测该自定义资源,按照业务逻辑,处理与该自定义资源相关联的特殊操作;

- 上述处理一般会引起集群内的状态变化,controller 会监测这些关联的变化,把这些变化记录到 CRD 的状态中。

构建工具

两者实际上并没有本质的区别,它们的核心都是使用官方的 controller-tools 和 controller-runtime。

operator SDK

operator framework,是 CoreOS 公司开发和维护的用于快速创建 operator 的工具,可以帮助我们快速构建 operator 应用。

安装

#下载operator sdk

wget https://github.com/operator-framework/operator-sdk/releases

创建项目

mkdir -p /root/go/src/github.com/memcached-operator

cd /root/go/src/github.com/memcached-operator

operator-sdk init --domain example.com --repo github.com/memcached-operator

创建API

operator-sdk create api --group cache --version v1alpha1 --kind Memcached --resource --controller

项目结构

# tree

.

├── api

│ └── v1alpha1

│ ├── groupversion_info.go

│ ├── memcached_types.go

│ └── zz_generated.deepcopy.go

├── bin

│ └── controller-gen

├── config

│ ├── crd

│ │ ├── kustomization.yaml

│ │ ├── kustomizeconfig.yaml

│ │ └── patches

│ │ ├── cainjection_in_memcacheds.yaml

│ │ └── webhook_in_memcacheds.yaml

│ ├── default

│ │ ├── kustomization.yaml

│ │ ├── manager_auth_proxy_patch.yaml

│ │ └── manager_config_patch.yaml

│ ├── manager

│ │ ├── controller_manager_config.yaml

│ │ ├── kustomization.yaml

│ │ └── manager.yaml

│ ├── manifests

│ │ └── kustomization.yaml

│ ├── prometheus

│ │ ├── kustomization.yaml

│ │ └── monitor.yaml

│ ├── rbac

│ │ ├── auth_proxy_client_clusterrole.yaml

│ │ ├── auth_proxy_role_binding.yaml

│ │ ├── auth_proxy_role.yaml

│ │ ├── auth_proxy_service.yaml

│ │ ├── kustomization.yaml

│ │ ├── leader_election_role_binding.yaml

│ │ ├── leader_election_role.yaml

│ │ ├── memcached_editor_role.yaml

│ │ ├── memcached_viewer_role.yaml

│ │ ├── role_binding.yaml

│ │ └── service_account.yaml

│ ├── samples

│ │ ├── cache_v1alpha1_memcached.yaml

│ │ └── kustomization.yaml

│ └── scorecard

│ ├── bases

│ │ └── config.yaml

│ ├── kustomization.yaml

│ └── patches

│ ├── basic.config.yaml

│ └── olm.config.yaml

├── controllers

│ ├── memcached_controller.go

│ └── suite_test.go

├── Dockerfile

├── go.mod

├── go.sum

├── hack

│ └── boilerplate.go.txt

├── main.go

├── Makefile

└── PROJECT

17 directories, 43 files

测试

将 CRD 安装到k8s集群中

make install

部署operator

make deploy

安装CR实例

kubectl apply -f config/samples/cache_v1alpha1_memcached.yaml

卸载operator

make undeploy

卸载CRD

make uninstall

kubebuilder

安装

# 下载 kubebuilder

wget https://github.com/kubernetes-sigs/kubebuilder/releases/download/v2.3.1/kubebuilder_2.3.1_linux_amd64.tar.gz

创建项目

mkdir -p /root/go/src/github.com/testcrd-controller

cd /root/go/src/github.com/testcrd-controller

#--domain 指定了后续注册 CRD 对象的 Group 域名

kubebuilder init --domain edas.io

创建API

kubebuilder create api --group apps --version v1alpha1 --kind Application

参数说明:

- group 加上之前的 domian 即此 CRD 的 Group: apps.edas.io;

- version 一般分三种,按社区标准:

- v1alpha1: 此 api 不稳定,CRD 可能废弃、字段可能随时调整,不要依赖;

- v1beta1: api 已稳定,会保证向后兼容,特性可能会调整;

- v1: api 和特性都已稳定;

- kind: 此 CRD 的类型,类似于 Service 的概念;

项目结构

# ls

api bin config controllers Dockerfile go.mod go.sum hack main.go Makefile PROJECT

# tree

.

├── api

│ └── v1alpha1

│ ├── application_types.go

│ ├── groupversion_info.go

│ └── zz_generated.deepcopy.go

├── bin

│ └── manager

├── config

│ ├── certmanager

│ │ ├── certificate.yaml

│ │ ├── kustomization.yaml

│ │ └── kustomizeconfig.yaml

│ ├── crd

│ │ ├── bases

│ │ │ └── apps.edas.io_applications.yaml

│ │ ├── kustomization.yaml

│ │ ├── kustomizeconfig.yaml

│ │ └── patches

│ │ ├── cainjection_in_applications.yaml

│ │ └── webhook_in_applications.yaml

│ ├── default

│ │ ├── kustomization.yaml

│ │ ├── manager_auth_proxy_patch.yaml

│ │ ├── manager_webhook_patch.yaml

│ │ └── webhookcainjection_patch.yaml

│ ├── manager

│ │ ├── kustomization.yaml

│ │ └── manager.yaml

│ ├── prometheus

│ │ ├── kustomization.yaml

│ │ └── monitor.yaml

│ ├── rbac

│ │ ├── application_editor_role.yaml

│ │ ├── application_viewer_role.yaml

│ │ ├── auth_proxy_client_clusterrole.yaml

│ │ ├── auth_proxy_role_binding.yaml

│ │ ├── auth_proxy_role.yaml

│ │ ├── auth_proxy_service.yaml

│ │ ├── kustomization.yaml

│ │ ├── leader_election_role_binding.yaml

│ │ ├── leader_election_role.yaml

│ │ ├── role_binding.yaml

│ │ └── role.yaml

│ ├── samples

│ │ └── apps_v1alpha1_application.yaml

│ └── webhook

│ ├── kustomization.yaml

│ ├── kustomizeconfig.yaml

│ └── service.yaml

├── controllers

│ ├── application_controller.go

│ └── suite_test.go

├── Dockerfile

├── go.mod

├── go.sum

├── hack

│ └── boilerplate.go.txt

├── main.go

├── Makefile

└── PROJECT

测试

将 CRD 安装到k8s集群中

make install

本地运行controller

make run

安装CR实例

kubectl apply -f config/samples/

卸载CRD

make uninstall

创建webhook

kubebuilder create webhook --group apps --version v1alpha1 --kind Application --defaulting --programmatic-validation

案例

Mongodb

安装mongodb operator

安装mongodb实例

---

apiVersion: mongodbcommunity.mongodb.com/v1

kind: MongoDBCommunity

metadata:

name: example-mongodb

spec:

members: 1

type: ReplicaSet

version: "4.2.6"

security:

authentication:

modes: ["SCRAM"]

users:

- name: my-user

db: admin

passwordSecretRef: # a reference to the secret that will be used to generate the user's password

name: my-user-password

roles:

- name: clusterAdmin

db: admin

- name: userAdminAnyDatabase

db: admin

scramCredentialsSecretName: my-scram

additionalMongodConfig:

storage.wiredTiger.engineConfig.journalCompressor: zlib

# the user credentials will be generated from this secret

# once the credentials are generated, this secret is no longer required

---

apiVersion: v1

kind: Secret

metadata:

name: my-user-password

type: Opaque

stringData:

password: "123456"

使用mongodb

# kubectl exec -it example-mongodb-0 -n mongodb bash

I have no name!@example-mongodb-0:/$ mongo

MongoDB shell version v4.2.6

connecting to: mongodb://127.0.0.1:27017/?compressors=disabled&gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("51607c54-bc7d-4006-8ef6-a9ff0a2e767a") }

MongoDB server version: 4.2.6

Welcome to the MongoDB shell.

For interactive help, type "help".

For more comprehensive documentation, see

http://docs.mongodb.org/

Questions? Try the support group

http://groups.google.com/group/mongodb-user

2021-08-23T03:21:29.304+0000 I STORAGE [main] In File::open(), ::open for '//.mongorc.js' failed with Permission denied

example-mongodb:PRIMARY> use admin

switched to db admin

example-mongodb:PRIMARY> db.auth('my-user', '123456')

1

example-mongodb:PRIMARY> show dbs

admin 0.000GB

config 0.000GB

local 0.000GB

example-mongodb:PRIMARY> exit

ECK

Elastic Cloud on Kubernetes(ECK)是一个 Elasticsearch Operator。 ECK 使用 Kubernetes Operator 模式构建而成,需要安装在您的 Kubernetes 集群内,其功能绝不仅限于简化 Kubernetes 上 Elasticsearch 和 Kibana 的部署工作这一项任务。ECK 专注于简化所有后期运行工作,例如:

- 管理和监测多个集群

- 轻松升级至新的版本

- 扩大或缩小集群容量

- 更改集群配置

- 动态调整本地存储的规模(包括 Elastic Local Volume(一款本地存储驱动器))

- 备份

安装 ECK 对应的 Operator 资源对象

#kubectl apply -f https://download.elastic.co/downloads/eck/1.6.0/all-in-one.yaml

Elasticsearch

创建单节点Elasticsearch 集群

cat <<EOF | kubectl apply -f -

apiVersion: elasticsearch.k8s.elastic.co/v1

kind: Elasticsearch

metadata:

name: quickstart

spec:

version: 7.13.2

nodeSets:

- name: default

count: 1

config:

node.store.allow_mmap: false

EOF

创建三节点Elasticsearch的集群

宿主机设置一下sysctl -w vm.max_map_count=262144

---

apiVersion: elasticsearch.k8s.elastic.co/v1

kind: Elasticsearch

metadata:

name: quickstart

spec:

version: 7.13.2

http:

service:

spec:

type: NodePort

nodeSets:

- name: default

count: 3

podTemplate:

spec:

volumes:

- name: elasticsearch-data

emptyDir: {}

查看es集群状态

# kubectl get elastic

NAME HEALTH NODES VERSION PHASE AGE

elasticsearch.elasticsearch.k8s.elastic.co/quickstart green 3 7.13.2 Ready 2m4s

# kubectl get pods

NAME READY STATUS RESTARTS AGE

quickstart-es-default-0 1/1 Running 0 2m13s

quickstart-es-default-1 1/1 Running 0 2m13s

quickstart-es-default-2 1/1 Running 0 2m13s

# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 22d

quickstart-es-default ClusterIP None <none> 9200/TCP 2m23s

quickstart-es-http ClusterIP 10.43.0.51 <none> 9200/TCP 2m24s

quickstart-es-transport ClusterIP None <none> 9300/TCP 2m24s

访问es集群

# PASSWORD=$(kubectl get secret quickstart-es-elastic-user -o go-template='{{.data.elastic | base64decode}}')

# curl -u "elastic:$PASSWORD" -k "https://xx.xx.xx.xx:port"

{

"name" : "quickstart-es-default-2",

"cluster_name" : "quickstart",

"cluster_uuid" : "_33mFEetTrCjkjKKJlbCCQ",

"version" : {

"number" : "7.13.2",

"build_flavor" : "default",

"build_type" : "docker",

"build_hash" : "4d960a0733be83dd2543ca018aa4ddc42e956800",

"build_date" : "2021-06-10T21:01:55.251515791Z",

"build_snapshot" : false,

"lucene_version" : "8.8.2",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}

#### kibana

##### 创建kibana

```yaml

apiVersion: kibana.k8s.elastic.co/v1

kind: Kibana

metadata:

name: testkibana

spec:

version: 7.13.2

count: 1

elasticsearchRef:

name: quickstart

查看kibna状态

# kubectl get kibanas.kibana.k8s.elastic.co

NAME HEALTH NODES VERSION AGE

testkibana green 1 7.13.2 68s

# kubectl get svc|grep kibana

testkibana-kb-http ClusterIP 10.43.50.88 <none> 5601/TCP 87s

访问kibana服务

配置kibana svc代理,将5601端口映射

# echo $(kubectl get secret quickstart-es-elastic-user -o=jsonpath='{.data.elastic}' | base64 --decode)

r0V8YQ1L51UavQOh8St8L913

#访问kibana页面:

https://xx.xx.xx.xx:5601/

用户名:elastic

密码:r0V8YQ1L51UavQOh8St8L913